
OpenAI o4-mini model: Complete technical guide and official specifications

Ready to transform your business with AI?

Discover how AI can transform your business and improve your productivity.

What is the OpenAI o4-mini Model?

Official OpenAI o4-mini model announcement

OpenAI unveiled the o4-mini model on April 16, 2025, as part of its "o-series" of models focused on advanced reasoning. Unlike GPT models optimized for general text generation, o4-mini prioritizes:

- Structured reasoning through chain-of-thought architecture

- Multimodal processing (text + images natively integrated)

- Cost efficiency, with roughly 10x lower pricing than o3

- Broad accessibility, including free-tier ChatGPT users

Key technical specifications:

- Context window: 200,000 tokens

- Output capacity: Up to 100,000 tokens

- Knowledge cutoff: June 1, 2024

- Native multimodal support: Yes

- API availability: Chat Completions & Responses APIs
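The two token limits above can be turned into a simple pre-flight check before sending a request. The sketch below is illustrative only: the function name `output_budget` is hypothetical, and it assumes that prompt and output tokens share the same 200,000-token window.

```python
CONTEXT_WINDOW = 200_000  # tokens, from the specification list above
MAX_OUTPUT = 100_000      # tokens, from the specification list above


def output_budget(prompt_tokens: int, requested_output: int) -> int:
    """Return how many output tokens can be requested for a given prompt size.

    Assumption: prompt and output tokens are counted against the same
    context window, so a long prompt shrinks the room left for the answer.
    """
    if prompt_tokens < 0 or requested_output < 0:
        raise ValueError("token counts must be non-negative")
    remaining = CONTEXT_WINDOW - prompt_tokens
    if remaining <= 0:
        raise ValueError("prompt alone fills the context window")
    # The answer is capped by the request, the output limit, and the room left.
    return min(requested_output, MAX_OUTPUT, remaining)
```

For a 150,000-token prompt, for instance, at most 50,000 output tokens remain, whatever the output limit allows.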

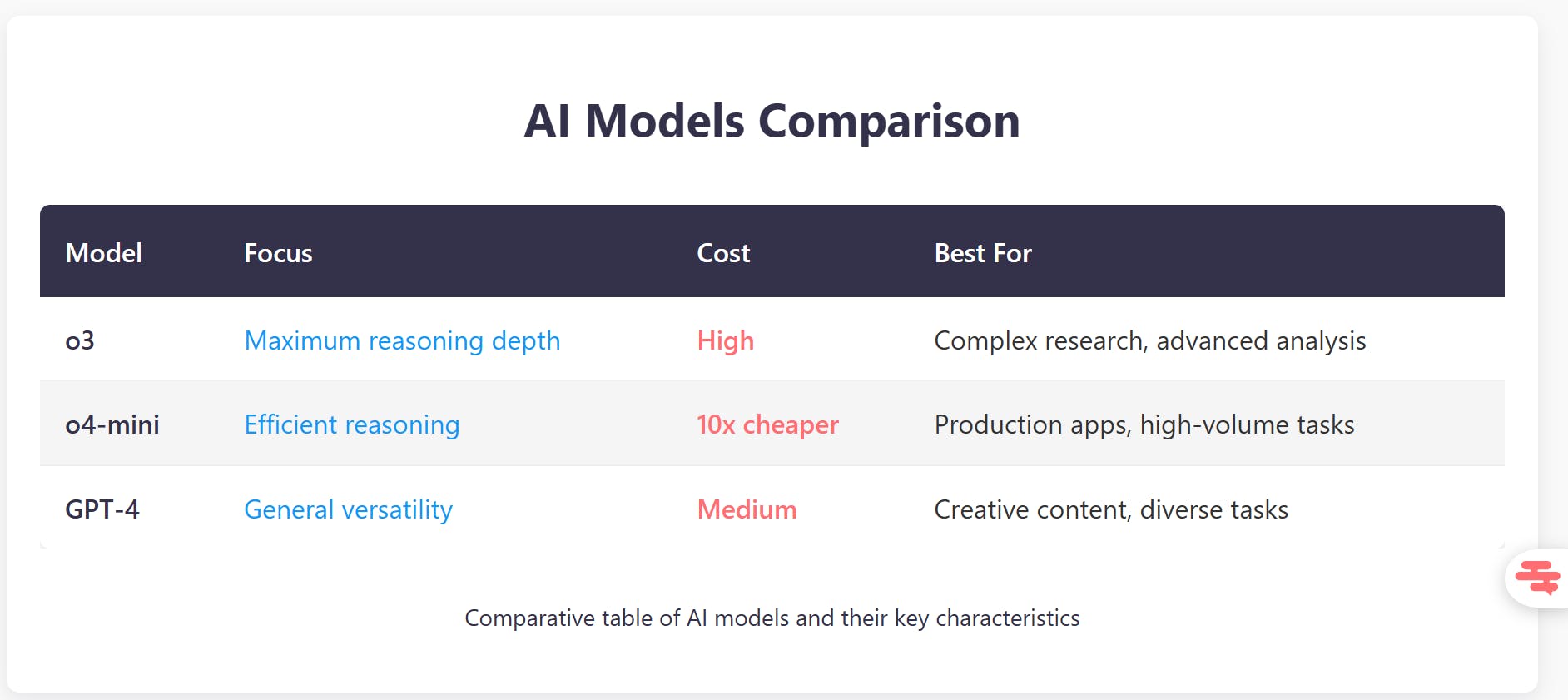

What is OpenAI o4-mini? Positioning in the ecosystem

The o4-mini model bridges the gap between premium reasoning models (o3) and accessible AI tools.

This positioning makes o4-mini a strong choice for developers who need reasoning capabilities at scale.

OpenAI o4-mini model description: Core architecture

Chain-of-Thought processing

The o4-mini design centers on three elements:

1. Sequential reasoning steps

- Breaks complex problems into logical stages

- Transparent thought process (users can track each step)

- 35% reduction in reasoning errors vs. previous models

2. Deliberative alignment

- Analyzes ethical implications of requests

- Reduces false positives in content filtering

- Balances safety with usability

3. Multimodal integration

Unlike previous models that treated images as separate inputs, o4-mini processes visual information within its reasoning chain.

Result: The model interprets visual elements as part of its reasoning process, not as isolated data points.
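In practice, this means text and image travel together in a single user message. The sketch below builds such a request body in the Chat Completions message format, using only the standard library. The helper name `build_image_question` and the placeholder image bytes are assumptions made for illustration; the payload is only constructed here, not sent.

```python
import base64


def build_image_question(question: str, image_bytes: bytes,
                         mime: str = "image/png") -> dict:
    """Build a request body that puts a question and an image in one message."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": "o4-mini",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    # The image is embedded inline as a base64 data URL.
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real chart or whiteboard photo.
payload = build_image_question("What trend does this chart show?", b"placeholder")
```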

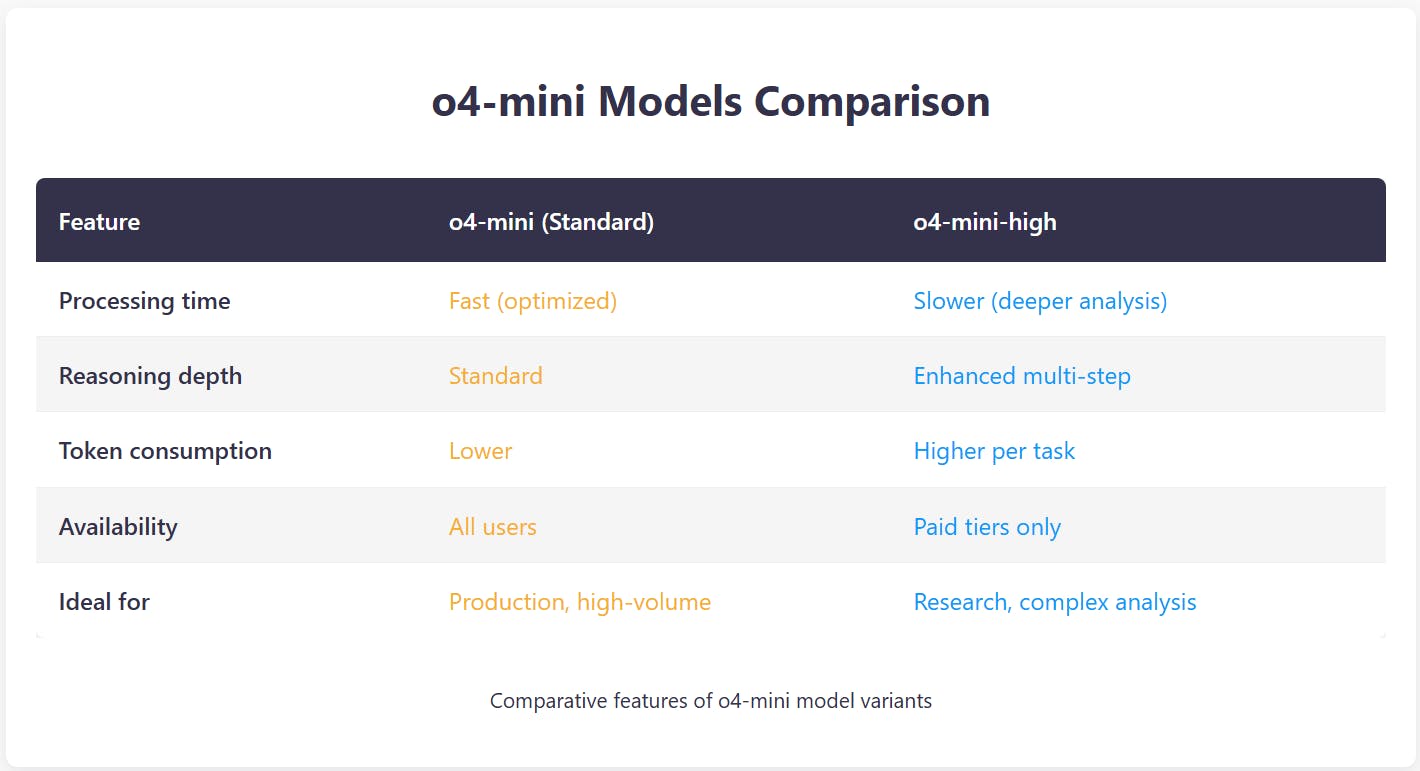

o4-mini-high OpenAI model: Premium variant explained

OpenAI o4-mini model details: Standard vs. High

The o4-mini-high variant offers greater analytical depth for complex tasks.

When to choose o4-mini-high:

- Multi-layered problem-solving (e.g., legal document analysis)

- Long-context reasoning (analyzing 50+ page reports)

- Tasks requiring nuanced interpretation

When standard o4-mini suffices:

- Code generation and debugging

- Data extraction from documents

- Mathematical problem-solving

- Real-time applications
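The two lists above can be read as a routing rule. As far as we understand, o4-mini-high is the same model run with a higher reasoning effort rather than a separate model, and the sketch below assumes that the API exposes this through the `reasoning_effort` request parameter. The task category names and the helper `build_routed_request` are hypothetical.

```python
# Task categories paraphrased from the two lists above (names are illustrative).
HIGH_EFFORT_TASKS = {"legal_analysis", "long_report", "nuanced_interpretation"}
STANDARD_TASKS = {"code_generation", "data_extraction", "math", "real_time"}


def build_routed_request(prompt: str, task: str) -> dict:
    """Choose a reasoning effort from the task category and build the request."""
    if task in HIGH_EFFORT_TASKS:
        effort = "high"    # assumed equivalent of o4-mini-high
    elif task in STANDARD_TASKS:
        effort = "medium"  # assumed default for standard o4-mini
    else:
        raise ValueError(f"unknown task category: {task!r}")
    return {
        "model": "o4-mini",
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }
```

Keeping the decision in one function makes it easy to audit which workloads pay for the deeper, slower reasoning mode.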

OpenAI o4-mini model capabilities: Technical breakdown

Official OpenAI o4-mini model capabilities

1. Mathematical reasoning

- AIME 2024 benchmark: Best-performing model in its class

- Solves competition-level math problems

- Shows step-by-step solution paths

Example use case:

Input: "Solve: If 3x + 7 = 25 and 2y - x = 5, find x and y"

Output: To solve the system

\[ \begin{cases} 3x + 7 = 25, \\ 2y - x = 5, \end{cases} \]

- From \(3x + 7 = 25\):

\(3x = 25 - 7 = 18\)

\(\displaystyle x = \frac{18}{3} = 6.\)

- Substitute \(x = 6\) into \(2y - x = 5\):

\(2y - 6 = 5\)

\(2y = 11\)

\(\displaystyle y = \frac{11}{2}.\)

Answer:

\[ x = 6,\quad y = \frac{11}{2}. \]
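Step-by-step answers like the one above are easy to verify independently. A minimal check with exact fractions, so that no rounding is involved, could look like this:

```python
from fractions import Fraction

# Reproduce the two solution steps with exact rational arithmetic.
x = Fraction(25 - 7, 3)  # from 3x + 7 = 25
y = (5 + x) / 2          # from 2y - x = 5

# Substitute back into both original equations.
assert 3 * x + 7 == 25
assert 2 * y - x == 5

print(x, y)  # prints "6 11/2"
```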

2. Advanced coding

- Generates production-ready code with explanations

- Debugs complex logic errors

- Supports tool use: Python execution, web browsing, and function calling
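Function calling, mentioned in the last bullet, works by describing a function to the model as a JSON schema and then executing whatever call the model requests. The sketch below shows the application side only: a tool definition in the Chat Completions `tools` format and a small dispatcher. The function `get_invoice_total`, its data, and the `run_tool_call` helper are hypothetical, and the model's tool call is simulated rather than fetched from the API.

```python
import json


def get_invoice_total(invoice_id: str) -> dict:
    """Hypothetical business function; a real one would query a database."""
    fake_db = {"INV-001": 1250.0}
    return {"invoice_id": invoice_id, "total": fake_db.get(invoice_id)}


# Schema sent to the model so it knows the function exists and how to call it.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_invoice_total",
            "description": "Return the total amount of an invoice.",
            "parameters": {
                "type": "object",
                "properties": {"invoice_id": {"type": "string"}},
                "required": ["invoice_id"],
            },
        },
    }
]

# Maps tool names to local Python callables.
REGISTRY = {"get_invoice_total": get_invoice_total}


def run_tool_call(name: str, arguments_json: str) -> str:
    """Execute a tool call requested by the model and return a JSON result."""
    if name not in REGISTRY:
        raise KeyError(f"model requested an unknown tool: {name}")
    arguments = json.loads(arguments_json)  # the model sends arguments as JSON text
    return json.dumps(REGISTRY[name](**arguments))
```

The string returned by `run_tool_call` would then be sent back to the model as a tool message so that it can finish its answer.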

3. Visual analysis

What is the o4-mini model best known for? Its native visual reasoning:

- Sketch interpretation: Analyzes hand-drawn diagrams

- Chart analysis: Extracts trends from graphs

- Handwriting recognition: Processes handwritten formulas

- Whiteboard comprehension: Understands brainstorming sessions

Real-world application:

A healthcare provider uses o4-mini to analyze medical records combining text reports and diagnostic images, reducing analysis time by 60%.

4. Structured outputs

Supports JSON mode for reliable data extraction.
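A minimal sketch of a JSON-mode extraction follows, assuming the Chat Completions `response_format` parameter set to `{"type": "json_object"}`. The helper names and the field names (`invoice_number`, `total`) are hypothetical. JSON mode only promises syntactically valid JSON, so the sketch still validates the keys it expects.

```python
import json


def build_extraction_request(document_text: str) -> dict:
    """Build a JSON-mode request asking for two fields from a document."""
    return {
        "model": "o4-mini",
        "response_format": {"type": "json_object"},
        "messages": [
            {
                "role": "user",
                # The prompt itself asks for JSON and names the expected keys.
                "content": (
                    "Extract invoice_number and total from the document below. "
                    "Reply with a JSON object containing exactly those keys.\n\n"
                    + document_text
                ),
            }
        ],
    }


def parse_extraction(raw: str, required: tuple = ("invoice_number", "total")) -> dict:
    """Parse the model's reply and check that the expected keys are present."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [key for key in required if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data
```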

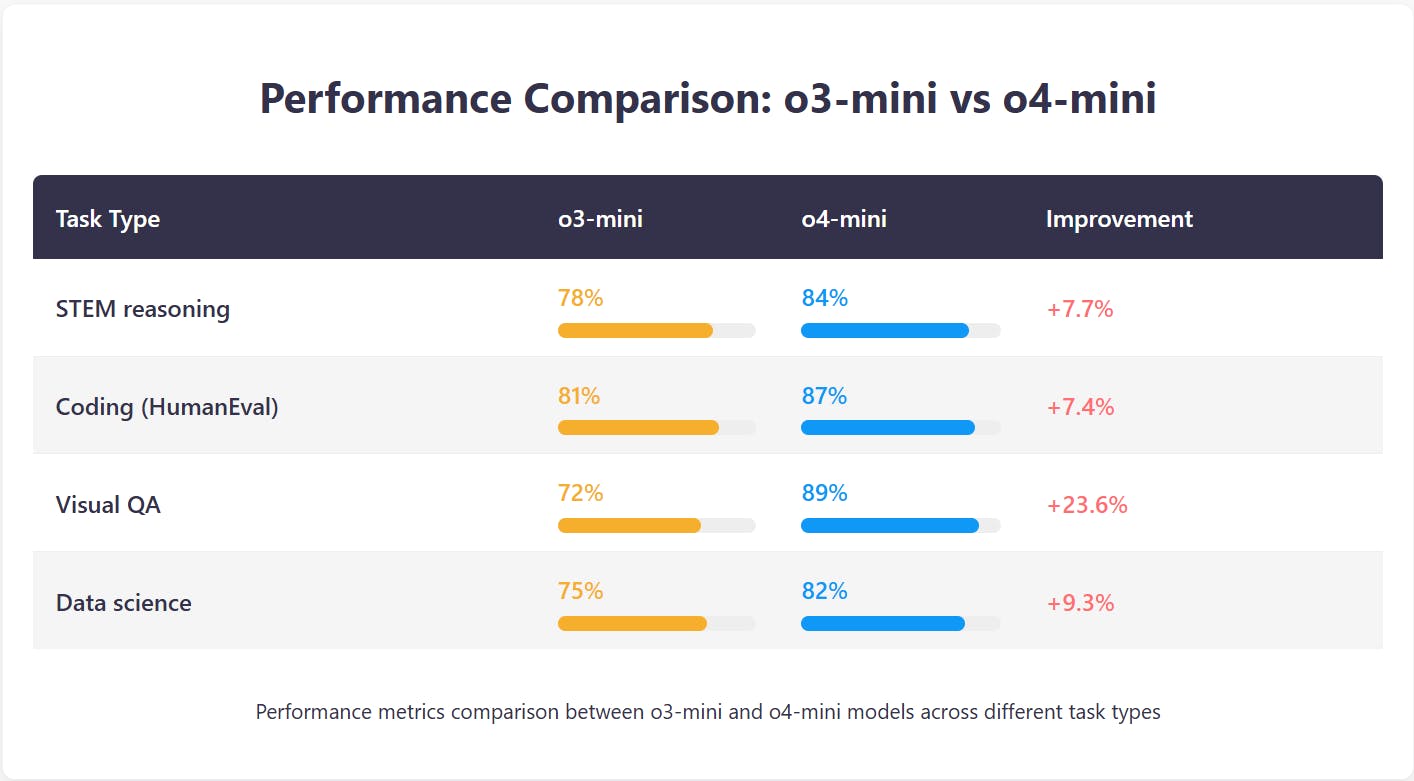

Official benchmarks and performance metrics

OpenAI o4-mini model official performance

Comparative benchmarks:

Energy efficiency:

- 40% reduced consumption vs. o3-mini

- 25% faster response times

- 30% lower token usage for equivalent tasks

Reliability metrics:

- Hallucination rate: 35% lower than GPT-4

- Reasoning error rate: 2.3% (vs. 3.8% for o3-mini)

- Context retention: 98.7% accuracy across 200K token window

Practical implementation guide

Getting started with OpenAI o4-mini

- API Integration

- Multimodal Workflow

- Function Calling for Automation
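For the first of these steps, a minimal integration sketch is given below. It assumes the official `openai` Python package and an `OPENAI_API_KEY` environment variable; the helper names `build_chat_request` and `send` are hypothetical, and the SDK is imported lazily so that the request builder can be used and tested without it.

```python
def build_chat_request(prompt: str, max_completion_tokens: int = 2000) -> dict:
    """Build a basic Chat Completions request body for o4-mini."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "model": "o4-mini",
        "messages": [{"role": "user", "content": prompt}],
        # Caps generated tokens; for reasoning models this is assumed to
        # include the hidden reasoning tokens as well as the visible answer.
        "max_completion_tokens": max_completion_tokens,
    }


def send(request: dict) -> str:
    """Send the request with the official SDK and return the answer text."""
    from openai import OpenAI  # lazy import: only needed when actually sending

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content
```

Calling `send(build_chat_request("Summarize this report in three bullet points."))` performs a real, billable API call, which is why building and sending are kept separate.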

Industry-specific applications

Healthcare:

- Medical record analysis (text + imaging)

- Diagnostic assistance with reasoning transparency

- Regulatory compliance documentation

Finance:

- Real-time risk assessment

- Regulatory compliance verification

- Automated document analysis with audit trails

Utilities & Public services:

- Energy demand forecasting

- Infrastructure data analysis

- Predictive maintenance scheduling

Education:

- Personalized tutoring with step-by-step explanations

- Automated grading with detailed feedback

- Research assistance for students

How to Access o4-mini with Swiftask

Swiftask offers o4-mini integration with additional advantages:

1. Multi-model orchestration: instead of using o4-mini in isolation, create intelligent workflows:

- Step 1: o4-mini analyzes raw data

- Step 2: GPT-4 generates creative content

- Step 3: Claude refines tone and style

- Step 4: Automated publishing

2. Expert accompaniment

- Half-day onboarding session

- Custom agent creation for your specific use case

- Immediate ROI with operational agents

3. Enterprise integration

- Native Azure AD SSO

- Role-based access control (RBAC)

- Centralized monitoring dashboard

- Compliance-ready architecture

Example Workflow:

Document Upload → o4-mini extracts key data → GPT-4o writes executive summary → Mistral translates to 5 languages → Automated distribution
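Conceptually, a workflow like this is a chain in which each step's output becomes the next step's input. The sketch below illustrates that idea in plain Python. It is not Swiftask's implementation or API: `run_pipeline` is a hypothetical helper, and the two stand-in steps replace real model calls with trivial string operations.

```python
from typing import Callable

Step = Callable[[str], str]


def run_pipeline(document: str, steps: list[tuple[str, Step]]) -> dict[str, str]:
    """Feed each step's output into the next and keep every intermediate result."""
    results: dict[str, str] = {}
    current = document
    for name, step in steps:
        current = step(current)
        results[name] = current  # kept for auditing and debugging
    return results


# Stand-in steps; in a real workflow each would call a different model.
def extract_key_data(text: str) -> str:
    return text.split(".")[0]


def write_summary(data: str) -> str:
    return f"Summary: {data}"


results = run_pipeline(
    "Revenue rose 12%. Other details follow.",
    [("extract", extract_key_data), ("summarize", write_summary)],
)
```

Keeping the intermediate results makes it possible to see which model produced which part of the final output.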

author

OSNI

Published

April 28, 2025
